NEIRetinalRegistration

This topic is a stub. The HTML help for NEI Build MP Maps algorithm can be found here [1]

Screening for low macular pigment (MP) and monitoring supplementation require robust methods to estimate the optical density of the MP. In this method, MP is measured by analyzing autofluorescence (AF) images and building MP maps.

The technique, which is implemented in the MIPAV "NEI Build MP maps" plug in, uses AF images with two excitation wavelengths (yellow and blue) to calculate the MP optical density distributions over a central eight fifteen degrees diameter area radius centered on the fovea. In this plugin, the fovea is defined to be the center of mass of macular pigment optical density within the selected VOI. In typical cases of centro-symmetric pigment distributions, this corresponds to the maximum value of pigment, which anatomically is typically at the center of the fovea (TBD).

Background

The algorithm takes as input two groups of retinal images acquired at two different excitation wavelengths and creates MP maps that show the distribution of macular pigment over the retina. The input images are obtained with a standard fundus camera for retinal photography equipped with an autofluorescence modality. Images are captured using different wavelength filters, yellow and blue (defined in Appendix I, TBD), and have a fixed black background. It is important for the user to know the weighted average of the normalized macular pigment absorption coefficient in the excitation.

Typical values for NEI filter sets are provided in Table ## (see Appendix I, TBD)

Outline of the method

- The plug in takes two directories of retinal images - one with "yellow" images and another one with "blue" images.

- The images from these directories are then converted to 32-bit.

- The images are then registered to a common reference image. After registration, a given pixel is assumed to correspond to the same physical location on the retina in every image. This makes it possible to measure the amount of macular pigment in the fovea over time, to compare the pigment's absorption over time, and to quantify how vitamins and other supplements affect the retina. It also allows monitoring of correlations between macular pigment levels and other microscopic changes in disease state, and other local quantities such as lipofuscin levels and drusen.

- The MP maps are then built for the registered images using the user-defined values for maximum and minimum intensity and relative extinction coefficients.

- The MP map average and standard deviation images are also created.

- All output images are converted back to 16-bit.

- The method also provides the user with the opportunity to analyze the MP map using the user defined VOI(s).
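The averaging and bit-depth steps of the outline can be illustrated with a minimal sketch in plain Python. It is not the plug-in's code: the function names `mean_and_std_maps` and `to_uint16` are hypothetical, the population form of the standard deviation is an assumption, and the linear rescaling used for the 16-bit conversion is only one plausible way to perform that step.

```python
import math

def mean_and_std_maps(maps):
    """Per-pixel mean and population standard deviation over equally sized 2D maps."""
    n = len(maps)
    height, width = len(maps[0]), len(maps[0][0])
    mean = [[0.0] * width for _ in range(height)]
    std = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            values = [m[y][x] for m in maps]
            mu = sum(values) / n
            mean[y][x] = mu
            # Assumption: population (divide by n) standard deviation.
            std[y][x] = math.sqrt(sum((v - mu) ** 2 for v in values) / n)
    return mean, std

def to_uint16(image, lo, hi):
    """Linearly map [lo, hi] to [0, 65535]; values outside the range are clamped."""
    scale = 65535.0 / (hi - lo)
    return [[int(round((min(max(v, lo), hi) - lo) * scale)) for v in row]
            for row in image]
```

Here `lo` and `hi` play the role of the user-defined minimum and maximum intensity; whether the plug-in uses them this way is an assumption.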

Registration

To analyze retinal images, each pixel location in the image must correspond to the same physical location on the participant's eye.

Because of the acquisition process, retinal images are very often non-uniformly illuminated and therefore exhibit local luminosity and contrast variability. This may seriously affect the algorithm's performance and its outcome. To counteract these effects and the pigment's light absorption, images from the "blue" and "yellow" groups and a reference image are first processed to emphasize vascular regions and then registered using vascular structures as reference points. To accentuate vascular regions, the algorithm applies gradient magnitude combined with a Gaussian (with a scale of 6 by 6) to the "blue" and "yellow" images and to the reference image.
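The vessel-emphasis step can be sketched as Gaussian smoothing followed by a finite-difference gradient magnitude. This is a minimal illustration in plain Python, not MIPAV's implementation: all function names are hypothetical, `sigma` stands in for the Gaussian scale (how it maps to the "6 by 6" setting is an assumption), and borders are handled by clamping indices.

```python
import math

def gaussian_kernel(sigma):
    """Normalized 1D Gaussian kernel truncated at about 3 sigma."""
    radius = max(1, int(math.ceil(3 * sigma)))
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def smooth_rows(image, kernel):
    """Convolve each row with the kernel, clamping indices at the borders."""
    radius = len(kernel) // 2
    width = len(image[0])
    return [[sum(kernel[k + radius] * row[min(max(x + k, 0), width - 1)]
                 for k in range(-radius, radius + 1))
             for x in range(width)]
            for row in image]

def transpose(image):
    return [list(col) for col in zip(*image)]

def gaussian_smooth(image, sigma):
    """Separable smoothing: rows first, then columns."""
    kernel = gaussian_kernel(sigma)
    rows_done = smooth_rows(image, kernel)
    return transpose(smooth_rows(transpose(rows_done), kernel))

def gradient_magnitude(image):
    """Central-difference gradient magnitude with clamped borders."""
    height, width = len(image), len(image[0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            gx = (image[y][min(x + 1, width - 1)] - image[y][max(x - 1, 0)]) / 2.0
            gy = (image[min(y + 1, height - 1)][x] - image[max(y - 1, 0)][x]) / 2.0
            out[y][x] = math.hypot(gx, gy)
    return out

def vessel_emphasis(image, sigma):
    """Smooth, then take the gradient magnitude, so edges such as vessels stand out."""
    return gradient_magnitude(gaussian_smooth(image, sigma))
```

Flat regions map to values near zero while intensity edges, such as vessel boundaries, produce high values, which is why the result is a useful input for registration.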

The algorithm removes the black border from all gradient magnitude images, leaving the eyeball region that will be used for registration. It then registers the gradient magnitude images to the reference image (also a gradient magnitude image). Registration is performed using the OAR algorithm (see MIPAV, Volume 2, Algorithms, "Optimized Automatic Registration 3D") with the following parameters:

- A rotation angle sampling range is set to -10 to 10 degrees;

- A coarse angle increment of 3;

- A fine angle increment of 2;

- The degrees of freedom are set to 3 (as for Rigid transformation);

- The correlation ratio cost function is used.
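To make the parameters above concrete, the following sketch enumerates the candidate rotation angles implied by the sampling range and increments, and applies a transformation with three degrees of freedom. It assumes 2D images, where a rigid transformation consists of one rotation and two translations. The function names are hypothetical, and how the registration algorithm actually uses the coarse and fine samples internally is not shown here.

```python
import math

def sample_angles(start_deg, stop_deg, step_deg):
    """Angles from start_deg up to stop_deg in steps of step_deg."""
    angles = []
    angle = start_deg
    while angle <= stop_deg:
        angles.append(angle)
        angle += step_deg
    return angles

def rigid_transform(point, angle_deg, tx, ty, center=(0.0, 0.0)):
    """Rotate point about center by angle_deg, then translate by (tx, ty)."""
    theta = math.radians(angle_deg)
    cx, cy = center
    x, y = point[0] - cx, point[1] - cy
    xr = x * math.cos(theta) - y * math.sin(theta)
    yr = x * math.sin(theta) + y * math.cos(theta)
    return (xr + cx + tx, yr + cy + ty)

coarse = sample_angles(-10, 10, 3)  # [-10, -7, -4, -1, 2, 5, 8]
fine = sample_angles(-10, 10, 2)    # [-10, -8, ..., 8, 10]
```

A registration search would evaluate the cost function (here, the correlation ratio) at such candidate transformations and keep the best one; that evaluation is omitted from the sketch.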

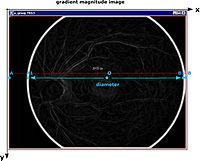

Removing the black border from images

To extract the eyeball part of the image for registration, the algorithm removes the black border from the gradient magnitude (GM) images using the following technique:

- In the GM image, it finds the leftmost pixel A with coordinates (0, imageHeight/2);

- Then, it searches from the pixel A towards the center of the image until it finds the first non-background pixel A1 on the image's left side. The search is based on a hardcoded threshold value;

- The algorithm finds the rightmost image pixel B with coordinates (imageWidth, imageHeight/2);

- It searches from the pixel B towards the center of the image until it finds the first non-background pixel B1 on the image's right side;

- The algorithm connects the points A1 and B1 and calculates the center pixel and the diameter;

- It creates a circular binary mask using the region grow algorithm;

- It applies the mask to the image removing the border.
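The steps above can be sketched in plain Python as follows. This is an illustration under stated assumptions, not the plug-in's code: the function names are hypothetical, the threshold is passed in rather than hardcoded, and the circular mask is built with a direct distance test instead of the region grow algorithm named above.

```python
def find_eye_circle(image, threshold):
    """Scan the middle row from both edges to find A1 and B1, then derive the circle."""
    height, width = len(image), len(image[0])
    mid_y = height // 2
    row = image[mid_y]
    # A1: first non-background pixel when moving from the left edge inward.
    left = next((x for x in range(width) if row[x] > threshold), None)
    # B1: first non-background pixel when moving from the right edge inward.
    right = next((x for x in range(width - 1, -1, -1) if row[x] > threshold), None)
    if left is None or right is None:
        raise ValueError("no non-background pixel found on the middle row")
    center = ((left + right) / 2.0, float(mid_y))
    diameter = right - left
    return center, diameter

def circular_mask(height, width, center, diameter):
    """Binary mask that is True inside the circle (distance test, not region grow)."""
    cx, cy = center
    radius_sq = (diameter / 2.0) ** 2
    return [[(x - cx) ** 2 + (y - cy) ** 2 <= radius_sq for x in range(width)]
            for y in range(height)]

def apply_mask(image, mask, background=0.0):
    """Keep pixels inside the mask and set everything else to the background value."""
    return [[value if keep else background for value, keep in zip(row, mask_row)]
            for row, mask_row in zip(image, mask)]
```

Scanning only the middle row works because the field of view is circular and centered vertically on that row, so the two hits span a full diameter.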

Building the MP map

To measure the optical density of the MP, the autofluorescence (AF) method uses two excitation wavelengths (blue and yellow) that are differentially absorbed by the MP, accounting thereby for the non-uniform distribution of lipofuscin in the retinal pigment epithelium (RPE).

The algorithm calculates the distribution of the optical density of MP based on the following theory:

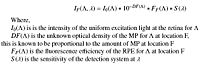

The autofluorescence IF(excitation wavelength, emission wavelength), measured at a given emission wavelength for a given excitation wavelength, for any location F in the field of interest is given by the following equation:

Equation 1

In equation 1, absorbers other than the MP and fluorophores other than RPE lipofuscin are neglected to simplify the process. Typically, contributions from rhodopsin absorption will confound these measurements. In typical fundus camera measurements, rhodopsin is bleached within the first flash and so does not affect the measurement. When using varying flashes and sensitivities, it is a good idea to verify that the intensity of the AF image is at steady state, i.e., that rhodopsin is bleached.

Note that in practical situations, neither the intensity of light incident on the retina, nor the detection sensitivity is known as they depend upon many factors. Therefore, equation 1 is applied to a reference location P, generally located in the periphery of the field.

A ratio of two versions of equation 1 applied for two locations F and P creates the following equation 2: